TL;DR: We take inspiration from arithmetic and present HINT, a new benchmark for studying systematic generalization of perception, syntax, and semantics.

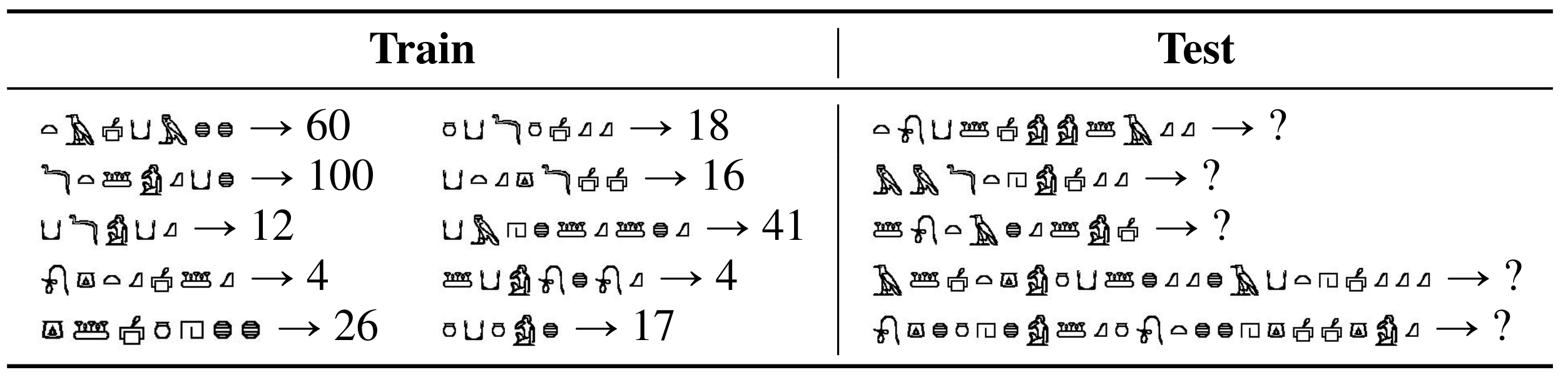

Inspired by humans' exceptional ability to master arithmetic and generalize to new problems, we present a new dataset, HINT, to examine machines' capability of learning generalizable concepts at three levels: perception, syntax, and semantics. In HINT, machines are tasked with learning how concepts are perceived from raw signals such as images (i.e., perception), how multiple concepts are structurally combined to form a valid expression (i.e., syntax), and how concepts are realized to afford various reasoning tasks (i.e., semantics), all in a weakly supervised manner. Focusing on systematic generalization, we carefully design a five-fold test set to evaluate both the interpolation and the extrapolation of learned concepts w.r.t the three levels. Further, we design a few-shot learning split to determine whether or not models can rapidly learn new concepts and generalize them to more complex scenarios.

To comprehend existing models' limitations, we undertake extensive experiments with various sequence-to-sequence models, including RNNs, Transformers, and GPT-3 (with the chain of thought prompting). The results indicate that current models struggle to extrapolate to long-range syntactic dependency and semantics. Models exhibit a considerable gap toward human-level generalization when evaluated with new concepts in a few-shot setting. Moreover, we discover that it is infeasible to solve HINT by merely scaling up the dataset and the model size; this strategy contributes little to the extrapolation of syntax and semantics. Finally, in zero-shot GPT-3 experiments, the chain of thought prompting exhibits impressive results and significantly boosts the test accuracy. We believe the HINT dataset and the experimental findings are of great interest to the learning community on systematic generalization.

@inproceedings{li2023hint,

title={A Minimalist Dataset for Systematic Generalization of Perception, Syntax, and Semantics},

author={Li, Qing and Huang, Siyuan and Hong, Yining and Zhu, Yixin and Wu, Ying Nian and Zhu, Song-Chun},

booktitle={International Conference on Learning Representations (ICLR)},

year={2023}

}